It is defined as the number of bits used to store one pixel of the image data. For an uncompressed grayscale image, Bits per Pixel (BPP) is typically 8 bits, and for a colour image it is typically 24 bits.

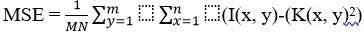

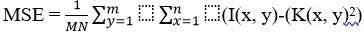
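As a rough illustration of the definition above, BPP can be computed by dividing the total number of stored bits by the number of pixels. This is a minimal sketch: the function name `bits_per_pixel`, the 512x512 image size, and the 32768-byte compressed file are assumptions chosen for the example, not values from the paper.

```python
def bits_per_pixel(stored_size_bytes: int, width: int, height: int) -> float:
    """Average number of bits used to store one pixel of an image."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    return stored_size_bytes * 8 / (width * height)


# Uncompressed 512x512 grayscale image: one byte per pixel.
raw_gray = bits_per_pixel(512 * 512, 512, 512)

# Uncompressed 512x512 colour image: three bytes (R, G, B) per pixel.
raw_colour = bits_per_pixel(512 * 512 * 3, 512, 512)

# Hypothetical compressed version of the grayscale image (32768 bytes).
compressed_gray = bits_per_pixel(32768, 512, 512)

print(raw_gray, raw_colour, compressed_gray)  # 8.0 24.0 1.0
```

In this sketch the compressed grayscale file needs 1 bit per pixel instead of 8, which corresponds to an 8:1 reduction in storage.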

c) Mean Square Error

Mean Square Error (MSE), also called the average prediction error, indicates how closely the decompressed image matches the original. It is calculated as the average of the squared differences between the pixel values of the decompressed and original images. A higher MSE indicates a poorer-quality image.

Here I is the original image, K is the decompressed approximation of it, and m and n are the dimensions of the image in pixels. A lower MSE indicates better picture quality.

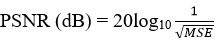

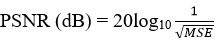
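The MSE calculation can be sketched in a few lines of code. The example below assumes the standard definition (the sum of squared pixel differences divided by m × n) and represents each image as a list of rows of pixel values. The function name `mean_square_error` and the 2x2 sample images are hypothetical and serve only as illustration.

```python
def mean_square_error(original, decompressed):
    """Average squared difference between two images of equal size.

    Each image is a list of m rows, and each row holds n pixel values.
    """
    m = len(original)
    n = len(original[0]) if m else 0
    if m == 0 or n == 0:
        raise ValueError("images must not be empty")
    if any(len(row) != n for row in original):
        raise ValueError("original image rows must have equal length")
    if len(decompressed) != m or any(len(row) != n for row in decompressed):
        raise ValueError("images must have the same dimensions")

    total = 0
    for i in range(m):
        for j in range(n):
            diff = original[i][j] - decompressed[i][j]
            total += diff * diff
    return total / (m * n)


# Hypothetical 2x2 grayscale images: I is the original, K the decompressed copy.
I = [[52, 55], [61, 59]]
K = [[50, 55], [63, 60]]

print(mean_square_error(I, K))  # (4 + 0 + 4 + 1) / 4 = 2.25
```

An identical pair of images gives an MSE of 0, and the value grows as the decompressed image drifts further from the original.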

d) Peak Signal-to-Noise Ratio

Peak Signal-to-Noise Ratio (PSNR) is a measure of the peak error. It is usually expressed on the logarithmic decibel (dB) scale. MSE and PSNR are both helpful parameters for comparing the quality of compressed image data.

A higher PSNR value indicates a better-quality reconstructed image.
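The relationship between PSNR and MSE can be illustrated with a short sketch. It assumes the commonly used definition PSNR = 10 · log10(MAX² / MSE), where MAX is the largest possible pixel value (255 for 8-bit images). The paper does not state this formula explicitly, so the function `psnr` and its default `max_value` are assumptions.

```python
import math


def psnr(mse: float, max_value: int = 255) -> float:
    """Peak signal-to-noise ratio in decibels for a given mean square error."""
    if mse < 0:
        raise ValueError("mse must be non-negative")
    if mse == 0:
        # Identical images: there is no error, so PSNR is unbounded.
        return math.inf
    return 10 * math.log10(max_value ** 2 / mse)


# A small error gives a high PSNR; a large error gives a low one.
low_error = psnr(2.25)    # about 44.6 dB
high_error = psnr(400.0)  # about 22.1 dB

print(round(low_error, 1), round(high_error, 1))
```

Because MSE sits in the denominator, a lower MSE always gives a higher PSNR, which is why the two metrics rank reconstructions in the same order.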

Conclusion

This paper presents different kinds of image compression techniques. There are basically two types of technique: lossless and lossy. From the study of these techniques, lossless image compression is the most effective at preserving image quality, since it reconstructs the original image exactly, whereas lossy image compression provides a higher compression ratio than lossless. The survey makes clear that the field will continue to interest researchers in the days to come.

