Introduction

In its simplest definition, artificial intelligence (AI) is the ability of a computer to display "intelligence". John McCarthy, who coined the term and is often called the father of artificial intelligence, defined it as "the science and engineering of making intelligent machines, especially intelligent computer programs." AI is the broad branch of computer science concerned with making machines appear to have human intelligence. It is a field of study, not a single system. Knowledge engineering is at the core of AI research: machines can be programmed to act and react like people only if they have abundant information about the world.

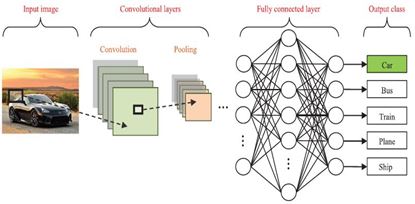

Machine Learning

Machine learning (ML) is a branch of artificial intelligence and a subfield of data science that allows computers to "learn" without being explicitly programmed. A machine learning model finds patterns by analysing historical data, known as "training data", and uses these patterns to make predictions about new data. The predictions of ML models have grown steadily more accurate. Over the past decade, machine learning has given us self-driving cars, speech recognition, effective web search, and a significantly improved understanding of human social structure.

Machine learning is now so pervasive that many people use it without realising it. Many researchers also see it as the most promising path towards AI that can compete with humans. The success of a learning machine depends on two factors: how well it generalises from the abstractions it draws out of the data, and how well it can apply what it has learned to predict future outcomes. The goal of machine learning is closely related to the goal of AI: to understand the nature of learning, including human learning and other types of learning, to study the computational aspects of learning behaviour, and to instil the ability to learn in computer systems. With applications in many fields of science, engineering, and society, machine learning is at the heart of artificial intelligence's success.

Techniques for Machine Learning

Machine learning is now used extensively across many industries, even though some of its applications are not always obvious. Its primary methods include:

1. Classification: Classification learns from training data, that is, observations whose categories are already known, and predicts the category to which a new observation belongs. For instance, classifying the price of a house as very expensive, costly, affordable, cheap, or very cheap.

2. Regression: Regression predicts a continuous value from the data. For instance, estimating the price of a property from its location, time of purchase, size, and so on.

3. Clustering: Clustering divides a set of data into subsets (i.e., clusters) so that the observations in the same cluster are similar in some way. For example, Netflix viewers can be grouped according to their viewing preferences.

4. Recommendation Systems: These use machine learning algorithms to help users discover new goods and services based on information about the user, the good or service, or both. For instance, YouTube may suggest a video based on a user's viewing habits, while Amazon may recommend products based on sales volume.

5. Anomaly Detection: Identifying observations that do not fit an expected pattern or the other items in a dataset. For instance, an outlier (or anomaly) among credit card transactions may indicate possible banking fraud.

6. Dimensionality Reduction: The process of reducing the number of random variables under consideration to obtain a smaller set of principal variables.

The three main categories of machine learning algorithms are as follows.

Predictive Models and Supervised Learning

Predictive models are used in supervised learning to make predictions about the future based on available historical data. In this technique, each training example is a pair consisting of an input object, which is commonly a vector, and a desired output value, also called the supervisory signal. Many approaches can be used to learn the mapping function from input to output. Supervised learning problems fall into two categories: classification and regression. Classification problems arise when the output variable is a category, such as "white" or "black", or "dog" or "cat". Regression problems arise when the output variable is a real value, such as "rupees", "weight", or "temperature".
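As a minimal sketch of a regression problem, the Python below fits a straight line to a handful of input/output pairs by ordinary least squares and then predicts the output for an unseen input. The data, the units, and the function name `fit_line` are illustrative assumptions, not taken from any real dataset.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least squares fit of y = slope * x + intercept."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


# Hypothetical training pairs: input = house size, output = price.
# The toy data lie exactly on the line price = 0.5 * size.
sizes = [50, 80, 100, 120]
prices = [25, 40, 50, 60]

slope, intercept = fit_line(sizes, prices)

# Use the learned mapping to predict the price of an unseen 90-unit house.
predicted = slope * 90 + intercept
print(slope, intercept, predicted)
```

Here the learned mapping is just two numbers, the slope and the intercept. Real regression models usually take several input features and noisy data, but the idea of learning a function from labelled pairs is the same.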

Supervised learning draws on a number of methods, including support vector regression (SVR), Gaussian process regression (GPR), neural networks, naive Bayes, and support vector machines, among others. Common supervised learning applications include image classification, identity theft detection, and weather forecasting.
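To illustrate classification, here is a small sketch using a k-nearest-neighbours classifier. This method is not one of those listed above; it is chosen only because it fits in a few lines. The measurements, the labels, and the function name `knn_predict` are assumptions made for the example.

```python
import math
from collections import Counter


def knn_predict(train, query, k=3):
    """Return the majority label among the k training points closest to query."""
    nearest = sorted(train, key=lambda pair: math.dist(pair[0], query))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical labelled examples: (weight, height) -> category.
train = [
    ((4.0, 25.0), "cat"),
    ((5.0, 23.0), "cat"),
    ((3.5, 24.0), "cat"),
    ((20.0, 55.0), "dog"),
    ((30.0, 60.0), "dog"),
    ((25.0, 58.0), "dog"),
]

label_small = knn_predict(train, (4.5, 24.0))
label_large = knn_predict(train, (28.0, 57.0))
print(label_small, label_large)
```

The output here is a category rather than a real value, which is what separates classification from regression.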

Descriptive Models and Unsupervised Learning

Unsupervised learning is the task of inferring a function that describes unlabeled data, that is, data whose observations carry no classification or categorisation. Because the examples given to the learner are unlabeled, there is no direct way to evaluate the accuracy of the algorithm's output, which distinguishes unsupervised learning from supervised and reinforcement learning. Unsupervised learning can be further divided into clustering and association. Clustering is about discovering the inherent groupings in the data, such as grouping the students in a class or school by height. Association rule learning, by contrast, is about discovering interesting relationships between the variables in a dataset. Real-world applications of unsupervised learning include NASA remote sensing, micro UAVs, and nano camera manufacturing technology, among others.
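The student-height example can be sketched with a simple one-dimensional k-means procedure, one common clustering algorithm among many. The heights, the starting centres, and the function name `kmeans_1d` are hypothetical values chosen for illustration.

```python
def kmeans_1d(values, centres, iterations=10):
    """Group numbers into clusters around the given starting centres."""
    centres = list(centres)
    clusters = [[] for _ in centres]
    for _ in range(iterations):
        # Assignment step: put each value in the cluster of its nearest centre.
        clusters = [[] for _ in centres]
        for v in values:
            idx = min(range(len(centres)), key=lambda i: abs(v - centres[i]))
            clusters[idx].append(v)
        # Update step: move each centre to the mean of its cluster.
        centres = [
            sum(c) / len(c) if c else centres[i]
            for i, c in enumerate(clusters)
        ]
    return centres, clusters


# Hypothetical student heights in centimetres; no labels are provided.
heights = [150, 152, 155, 170, 172, 175]

centres, clusters = kmeans_1d(heights, centres=[150, 175])
print(centres, clusters)
```

No labels are given to the algorithm. The two groups emerge only from how close the heights are to one another, and the result can depend on the starting centres.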
