A Perceiving and Recognizing Automaton Prediction for Stock Market

Tere OP¹*, Kshatriya D²

DOI:10.54741/asejar.2.2.4

1* Om Prakash Tere, Student, Department of Civil Engineering, Dr DY Patil College of Engineering, Pimpri, Pune, India.

2 Domesh Kshatriya, Guide, Department of Civil Engineering, Dr DY Patil College of Engineering, Pimpri, Pune, India.

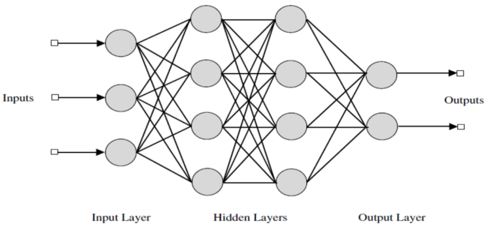

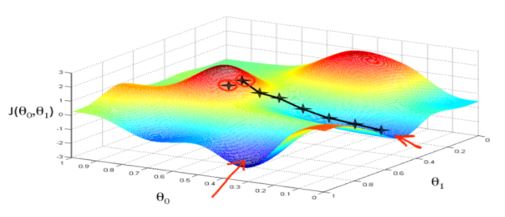

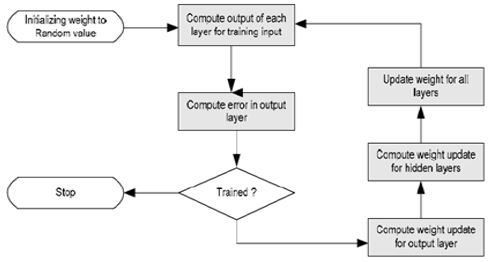

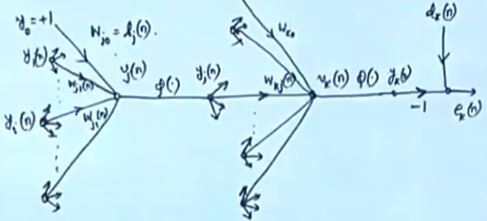

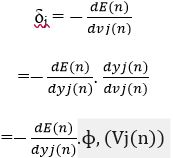

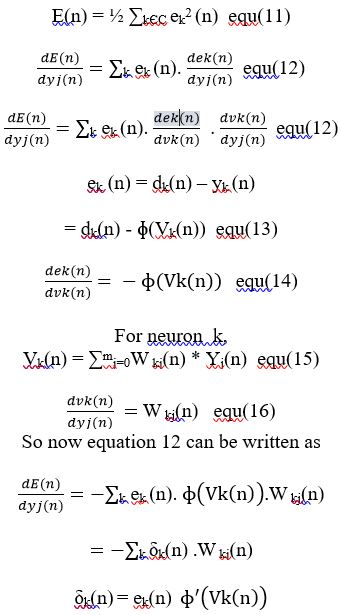

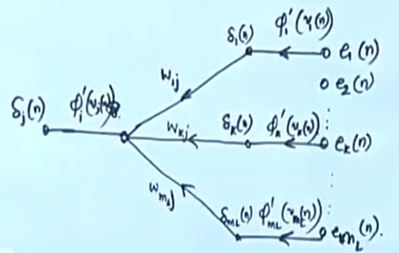

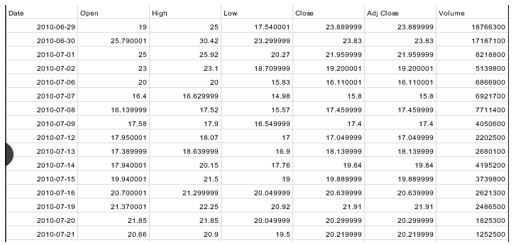

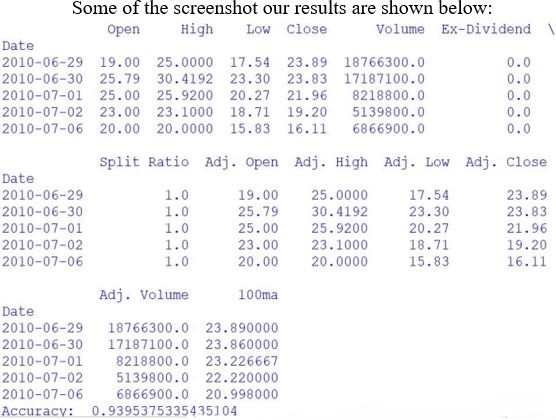

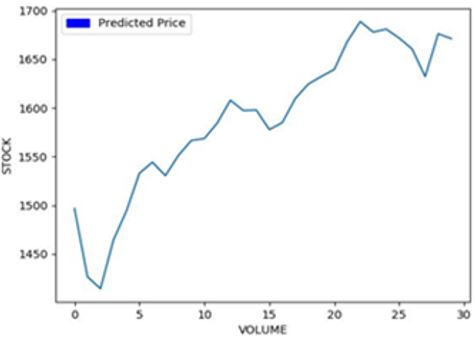

Stock market prediction is the task of forecasting the future value of a company's equity. To forecast stock market prices, this research proposes a machine-learning (ML) model based on an artificial neural network (ANN). The ANN is trained with the back-propagation algorithm, and the experiments reported in this publication use the TESLA dataset.

Keywords: back-propagation, artificial neural network, stock-market prediction
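The abstract does not give the network's architecture, so the following is only a minimal sketch of the general idea: a small feed-forward ANN trained by back-propagation to predict the next price from a sliding window of previous prices. Everything here is an assumption for illustration, not the authors' model: the window length (5), one hidden `tanh` layer of 8 units, a linear output, plain per-sample gradient descent on squared error with learning rate 0.05, min-max scaling, and the helper names `make_windows`, `minmax_scale`, `TinyMLP` and `train`. The example series is synthetic, not the TESLA dataset.

```python
import math
import random


def make_windows(prices, window):
    """Turn a price series into (previous `window` prices, next price) pairs."""
    return [(prices[i:i + window], prices[i + window])
            for i in range(len(prices) - window)]


def minmax_scale(values):
    """Scale values to [0, 1]; also return (lo, hi) so forecasts can be unscaled."""
    lo, hi = min(values), max(values)
    span = (hi - lo) or 1.0
    return [(v - lo) / span for v in values], (lo, hi)


class TinyMLP:
    """Hypothetical one-hidden-layer network: tanh hidden units, linear output."""

    def __init__(self, n_in, n_hidden, seed=0):
        rng = random.Random(seed)
        self.w1 = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
                   for _ in range(n_hidden)]
        self.b1 = [0.0] * n_hidden
        self.w2 = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
        self.b2 = 0.0

    def forward(self, x):
        """Forward pass: return hidden activations and the scalar prediction."""
        h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
             for row, b in zip(self.w1, self.b1)]
        y = sum(w * hj for w, hj in zip(self.w2, h)) + self.b2
        return h, y

    def train_step(self, x, target, lr):
        """One back-propagation update on L = 0.5 * (y - target)^2; returns L."""
        h, y = self.forward(x)
        err = y - target  # dL/dy
        for j, hj in enumerate(h):
            # Error propagated to hidden unit j, using w2[j] before it is updated;
            # tanh'(z) = 1 - tanh(z)^2.
            grad_h = err * self.w2[j] * (1.0 - hj * hj)
            self.w2[j] -= lr * err * hj
            for k, xk in enumerate(x):
                self.w1[j][k] -= lr * grad_h * xk
            self.b1[j] -= lr * grad_h
        self.b2 -= lr * err
        return 0.5 * err * err


def train(pairs, n_hidden=8, epochs=200, lr=0.05, seed=0):
    """Train on all pairs for `epochs` passes; return the net and mean loss per epoch."""
    net = TinyMLP(len(pairs[0][0]), n_hidden, seed)
    history = []
    for _ in range(epochs):
        total = sum(net.train_step(x, t, lr) for x, t in pairs)
        history.append(total / len(pairs))
    return net, history


if __name__ == "__main__":
    # Synthetic stand-in series (NOT the TESLA dataset): trend plus oscillation.
    prices = [100 + 0.3 * i + 10 * math.sin(i / 5) for i in range(120)]
    scaled, (lo, hi) = minmax_scale(prices)
    net, history = train(make_windows(scaled, window=5))
    _, y = net.forward(scaled[-5:])
    print(f"mean loss: first epoch {history[0]:.5f}, last epoch {history[-1]:.5f}")
    print(f"next-step forecast: {lo + y * (hi - lo):.2f}")
```

Scaling the inputs to [0, 1] keeps the `tanh` units out of saturation, which is why the forecast is mapped back with `lo + y * (hi - lo)`. A real experiment would also hold out the most recent windows as a test set rather than reporting training loss only.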

| Corresponding Author | How to Cite this Article |
|---|---|
| Om Prakash Tere, Student, Department of Civil Engineering, Dr DY Patil College of Engineering, Pimpri, Pune, India. Email: | Tere OP, Kshatriya D. A Perceiving and Recognizing Automaton Prediction for Stock Market. Appl. Sci. Eng. J. Adv. Res. 2023;2(2):19-25. Available from https://asejar.singhpublication.com/index.php/ojs/article/view/47 |
