The proposed fusion approach is evaluated on the image classification benchmark data sets CIFAR-10, NORB, and SVHN. This paper shows that the proposed approach improves the reported performance of existing models by 0.38%, 3.21%, and 0.13%, respectively.

COMPARISON OF CNN WITH OTHER CLASSIFICATION ALGORITHMS

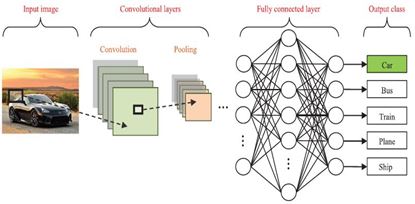

In practice, the choice of classifier depends on the dataset and the overall complexity of the problem. Setting up a deep learning model is more tedious than using classifiers such as random forests or SVMs, but deep learning shines on complex problems like image classification, natural language processing, and speech recognition, and it lets us worry less about feature engineering. CNNs are neural networks that are trained with an optimization method, whereas genetic algorithms are themselves a class of optimization methods and do not, on their own, learn a model. A CNN is a specialized type of ANN that may or may not be deep, depending on the model. Adding layers to a CNN increases model complexity, whereas an SVM has no comparable way of growing the model by stacking layers. CNNs are typically used when a large amount of data is available, while SVMs are often preferred when less data is available [25].

CNNs require relatively little pre-processing compared with other image classification algorithms. Their built-in advantage over traditional methods for computer vision problems is that they learn hierarchical features, i.e., which features are useful and how to compute them. Compared with RNNs, CNNs are better suited to the image domain, because their filters act as feature detectors that loosely mimic the human visual system. Once trained, a CNN is usually very fast at classifying or predicting new inputs.
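To make the idea of filters acting as feature detectors concrete, the following sketch slides one hand-picked 3x3 filter over a tiny synthetic image. It rests on simplifying assumptions (a single grayscale channel, stride 1, no padding, Prewitt-style weights chosen by hand, and the hypothetical helper names `conv2d_valid` and `relu`); it is an illustration, not the architecture of any model discussed here.

```python
# Minimal sketch: a single 3x3 filter acting as a vertical-edge detector.
# Like most CNN libraries, this computes a "valid" cross-correlation
# (the kernel is not flipped), with stride 1 and no padding.

def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` and return the feature map (no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Dot product between the kernel and the image patch under it.
            acc = 0
            for di in range(kh):
                for dj in range(kw):
                    acc += image[i + di][j + dj] * kernel[di][dj]
            row.append(acc)
        feature_map.append(row)
    return feature_map


def relu(feature_map):
    """Element-wise ReLU, the usual non-linearity applied after a convolution."""
    return [[max(0, v) for v in row] for row in feature_map]


# Hand-picked vertical-edge filter (Prewitt-style). In a trained CNN these
# weights would be learned from data rather than chosen by hand.
VERTICAL_EDGE = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

# 5x6 toy grayscale image: dark left half (0), bright right half (1).
image = [[0, 0, 0, 1, 1, 1] for _ in range(5)]

activation = relu(conv2d_valid(image, VERTICAL_EDGE))
for row in activation:
    print(row)
```

Each printed row is `[0, 3, 3, 0]`: the filter responds only where dark pixels meet bright ones and stays silent over flat regions. Stacking layers of such detectors, with learned rather than hand-set weights, is what allows a CNN to build up the hierarchical features described above.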

Proposed Work

The fishing industry is among the largest food industries on the planet and one of the main pillars of the economy in coastal countries. To safeguard this fishery for the future, organizations are using cameras to monitor fishing activities. The proposed approach is to develop a model using a convolutional neural network to automatically detect and classify different species of fish. The recorded video will be sliced into images, which are then used as input to the model.
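The slicing step can be sketched as choosing which video frames to keep. The helper `sample_frame_indices` and its fixed one-frame-per-interval policy are assumptions for illustration, not part of the proposed work; decoding the selected frames would be left to a video library such as OpenCV, whose `cv2.VideoCapture` reads a file frame by frame.

```python
# Hypothetical helper: choose which video frames to keep when slicing
# footage into still images, sampling one frame every `interval_s` seconds.

def sample_frame_indices(total_frames, fps, interval_s):
    """Return indices of frames to extract, one every `interval_s` seconds."""
    if fps <= 0 or interval_s <= 0:
        raise ValueError("fps and interval_s must be positive")
    step = max(1, round(fps * interval_s))  # number of frames between two samples
    return list(range(0, total_frames, step))


# Example: a 10-second clip at 30 fps, keeping one frame per second.
indices = sample_frame_indices(total_frames=300, fps=30, interval_s=1.0)
print(len(indices), indices[:3])
```

For a 10-second clip at 30 fps and a 1-second interval, this keeps 10 frames (indices 0, 30, 60, ...). Sampling at an interval rather than keeping every frame is one way to avoid feeding the model many near-duplicate images taken from consecutive frames.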

Conclusion

Convolutional neural networks perform better than other state-of-the-art methods on larger datasets.

A CNN not only extracts features automatically, which reduces the workload of manual feature extraction, but also classifies images in a shorter amount of time. Classical decision trees, bagging, boosting, random forests, and SVMs are other methods that have been evaluated on different image classification problems. Random forests can classify more accurately, but only on small datasets [18]. CNNs are advantageous because they learn optimal features from images adaptively. Experimental comparisons with other image classification algorithms have revealed a substantial increase in accuracy when using CNNs [16].

References

1. Wei Wang, Gang Chen, & Haibo Chen. (2016). Deep learning at scale and at ease. ACM Transactions on Multimedia Computing, Communications, and Applications (TOMM), 12(4s), 69-94.

2. Yann LeCun, Yoshua Bengio, & Geoffrey Hinton. (2015). Deep learning. Nature, 521(7553), 436-444.

3. Geoffrey E. Hinton, Simon Osindero, & Yee-Whye Teh. (2006). A fast learning algorithm for deep belief nets. Neural Computation, 18(7), 1527-1554.

4. Li Deng, & Dong Yu. (2013). Deep learning: Methods and applications. Foundations and Trends in Signal Processing, 7(3-4), 197-387.

5. Tom M. Mitchell. (2006). The discipline of machine learning. Technical Report CMU-ML-06-108, Carnegie Mellon University.

6. Geoffrey Hinton. (2013). Where do features come from? Canada: Department of Computer Science, University of Toronto, pp. 1-33.

7. Rich Caruana, & Alexandru Niculescu-Mizil. (2006). An empirical comparison of supervised learning algorithms. International Conference on Machine Learning, Pittsburgh, PA, 23(1), pp. 1-8.

8. Seema Sharma, Jitendra Agrawal, Shikha Agarwal, & Sanjeev Sharma. (2013). Machine learning techniques for data mining: A survey. IEEE, 2, pp. 2-13.

9. Alex Krizhevsky, & Geoffrey E. Hinton. (2011). Using very deep autoencoders for content-based image retrieval. University of Toronto - Department of Computer Science, 2(18), 34-56.

10. Wei-Lun Chao. (2011). Machine learning tutorial. Taiwan: DISP Lab, Graduate Institute of Communication Engineering, National Taiwan University, pp. 1-56.

11. Wei Wang. (2014). Big data, big challenges. IEEE, 11(3), pp. 34-56.

12. Keiron O’Shea, & Ryan Nash. (2015). An introduction to convolutional neural networks. arXiv:1511.08458v2.

13. Abdelkrim, A., & Loussaief, S. (2016). Machine learning framework for image classification. 7th International Conference on Sciences of Electronics, Technologies of Information and Telecommunications (SETIT), pp. 58-61.
