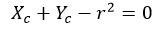

The equation can define any circle that passes through the edge points in terms of its centre coordinates (Xc, Yc) and its radius r.

Processing is slow when the amount of computation is large [10].
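The circle parameterisation above, and the reason the computation grows quickly, can be illustrated with a minimal voting sketch. It assumes the circle-fitting step is a circular Hough transform (the method name is not stated in this passage). The function name `hough_circles` and the parameters `radii` and `n_angles` are hypothetical and chosen only for illustration.

```python
import numpy as np

def hough_circles(edge_points, shape, radii, n_angles=90):
    """Vote for the circle (xc, yc, r) that passes through most edge points."""
    h, w = shape
    acc = np.zeros((len(radii), h, w), dtype=np.int32)
    thetas = np.linspace(0.0, 2.0 * np.pi, n_angles, endpoint=False)
    for ri, r in enumerate(radii):
        for x, y in edge_points:
            # Every centre lying at distance r from (x, y) is a candidate.
            xc = np.rint(x - r * np.cos(thetas)).astype(int)
            yc = np.rint(y - r * np.sin(thetas)).astype(int)
            ok = (xc >= 0) & (xc < w) & (yc >= 0) & (yc < h)
            if not ok.any():
                continue
            # Each edge point votes at most once per accumulator cell.
            cells = np.unique(np.stack([yc[ok], xc[ok]], axis=1), axis=0)
            acc[ri, cells[:, 0], cells[:, 1]] += 1
    ri, yc, xc = np.unravel_index(np.argmax(acc), acc.shape)
    return xc, yc, radii[ri]
```

The nested loops over radii, edge points, and angles make the cost proportional to the product of the three, which is consistent with the slow processing noted above when many candidates must be examined.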

K-means Clustering

This technique divides the eye image into three distinct regions based on intensity. The first region represents the iris, including the pupil and eyelashes. The second region contains the sclera and luminance reflections, which have high intensity values. The third region is the skin, whose intensity lies between those of the other two regions. Because the upper eyelid occlusion is modelled by an arc, no useful area of the iris is lost.
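The intensity-based partition into three regions can be sketched with plain Lloyd's k-means on the pixel values. This is only an illustration under stated assumptions: the function name `kmeans_intensity`, the quantile-based initialisation, and the iteration limit are hypothetical choices, not details taken from the method described above.

```python
import numpy as np

def kmeans_intensity(image, k=3, n_iter=50):
    """Cluster pixel intensities into k groups; label 0 is the darkest."""
    pixels = image.astype(float).ravel()
    # Initialise the centres at evenly spaced intensity quantiles (assumption).
    centres = np.quantile(pixels, (np.arange(k) + 0.5) / k)
    for _ in range(n_iter):
        # Assign every pixel to its nearest centre.
        labels = np.argmin(np.abs(pixels[:, None] - centres[None, :]), axis=1)
        # Move each centre to the mean of its pixels (keep it if it is empty).
        updated = np.array([
            pixels[labels == j].mean() if np.any(labels == j) else centres[j]
            for j in range(k)
        ])
        if np.allclose(updated, centres):
            break
        centres = updated
    # Sort the centres so that labels are ordered from dark to bright.
    centres = np.sort(centres)
    labels = np.argmin(np.abs(pixels[:, None] - centres[None, :]), axis=1)
    return labels.reshape(image.shape), centres
```

With `k=3`, the darkest label would correspond to the iris region, the brightest to the sclera and reflections, and the intermediate label to the skin.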

Active Contour Models

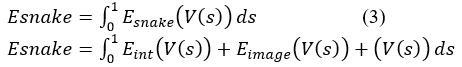

This algorithm treats the contours as deformable boundaries rather than assuming a circular shape, which improves localization of the iris from the sclera and the pupil. The active contour methods are listed below. Traditional active contour methods start from an initial shape and evolve the curve by bending it toward the object's boundary. The contour is obtained by minimising an energy function, defined as a weighted combination of internal forces derived from the shape of the snake and external forces derived from the image.

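The energy function is defined in equation (3):

$$E_{snake} = \int_{0}^{1} \left[ E_{int}(V(s)) + E_{imag}(V(s)) + E_{con}(V(s)) \right] ds \qquad (3)$$

Where

Eint (V(s)): represents the internal energy of the spline due to bending.

Eimag (V(s)): represents the image forces.

Econ (V(s)): represents the external constraint force.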
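As a rough illustration of how the three energy terms combine, the following sketch evaluates a discrete version of the energy for a closed contour. It assumes the common snake formulation in which the internal energy is built from first and second differences of the contour weighted by `alpha` and `beta`, and the image energy is the negative squared gradient magnitude. These choices, the function name `snake_energy`, and the zero constraint term are assumptions made for illustration.

```python
import numpy as np

def snake_energy(points, image, alpha=0.1, beta=0.1):
    """Discrete energy of a closed contour given as an (N, 2) array of (row, col)."""
    v = np.asarray(points, dtype=float)
    # Finite differences along the closed contour (indices wrap around).
    d1 = np.roll(v, -1, axis=0) - v
    d2 = np.roll(v, -1, axis=0) - 2.0 * v + np.roll(v, 1, axis=0)
    # Internal energy: stretching (alpha) plus bending (beta).
    e_int = 0.5 * np.sum(alpha * np.sum(d1**2, axis=1)
                         + beta * np.sum(d2**2, axis=1))
    # Image energy: low (negative) where the gradient magnitude is high.
    gy, gx = np.gradient(image.astype(float))
    r = np.clip(np.rint(v[:, 0]).astype(int), 0, image.shape[0] - 1)
    c = np.clip(np.rint(v[:, 1]).astype(int), 0, image.shape[1] - 1)
    e_imag = -np.sum(gx[r, c]**2 + gy[r, c]**2)
    # No external constraint is applied in this sketch.
    e_con = 0.0
    return e_int + e_imag + e_con
```

A contour lying on a strong edge yields a lower image energy than one in a flat region, which is what drives the snake toward boundaries during minimisation.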

Active Contour without Edges

In practice the stopping function is never exactly zero on edges, because discrete gradients are bounded, so the curve may pass through the boundary. To address this issue, a new active contour model has been developed: the active contour without edges model. Eyelashes and corneal reflections have no effect on this model's ability to accurately locate the pupil.

Gradient Vector Flow (GVF) Snake

The outer iris boundary is harder to locate than the inner iris boundary. Because the intensity difference across the boundary between the iris and the sclera is small, the active contour without edges model cannot segment it correctly. The GVF snake addresses this issue by defining a new external force field, the gradient vector flow field.
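The idea of a diffused external force field can be sketched as follows. The sketch assumes the standard GVF formulation, in which the gradient of an edge map is diffused iteratively so that the force extends into flat regions where the plain gradient vanishes. The function names, the regularisation weight `mu`, the time step `dt`, and the iteration count are hypothetical values chosen for illustration.

```python
import numpy as np

def laplacian(a):
    """Five-point Laplacian with replicated borders."""
    p = np.pad(a, 1, mode="edge")
    return p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4.0 * a

def gradient_vector_flow(edge_map, mu=0.2, n_iter=200, dt=1.0):
    """Return the GVF field (u, v) as column and row force components."""
    f = edge_map.astype(float)
    span = f.max() - f.min()
    if span > 0:
        f = (f - f.min()) / span          # normalise the edge map to [0, 1]
    fy, fx = np.gradient(f)               # row and column derivatives
    mag2 = fx**2 + fy**2
    u, v = fx.copy(), fy.copy()           # start from the plain gradient
    for _ in range(n_iter):
        # Diffuse the field where the gradient is weak, keep it near edges.
        u = u + dt * (mu * laplacian(u) - (u - fx) * mag2)
        v = v + dt * (mu * laplacian(v) - (v - fy) * mag2)
    return u, v
```

Far from a weak boundary the plain gradient is zero, whereas the diffused field still points toward the boundary, which is why such a field can help on the low-contrast outer iris boundary.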

Statistical Learning Methods

This is a three-step iris segmentation process that uses the least median of square and the linear basis function to calculate the coarse iris centre and radius, then the coarse iris boundaries, and finally the fine iris boundaries and centres. It also evaluates image quality in order to reject low-quality images, such as those that are out of focus or fail to segment.

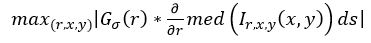

Least Median of Square Differential Operator (LMedSDO)

This method calculates the coarse iris centre and the radii of the inner and outer iris boundaries. It is more robust than the Integro-Differential Operator (IDO), at the cost of more computation. It is given by equation (4):

where med stands for median.

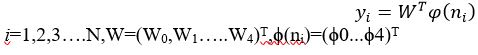

Linear Basis Function

It is used to find the coarse inner and outer boundaries of the iris. It is preferred because it uses trigonometric functions () where (K = 1, 2) as basis functions, which provide fast and flexible computation. It is given by equation (5):

Where

RANSAC (Random Sample Consensus)

Occlusion by eyelids, eyelashes, and specular reflections is a common cause of outlier points in an image. The procedure consists of the following steps:

- The Linear Basis Function model is fitted to all of the coarse boundary points.
